At least on the surface - and quite often on the least suitable or meaningful surface - people around here seem to like order. Admittedly, this "order" often translates into "cut to the same size"[1], and "here" is this island that sits geographically north of the European continent, preferably a few hundred years back in time, and otherwise rather aimlessly adrift on a sea of... well, let's be charitable for once and call it cluelessness. In other words, present-day UK is, on the surface, committed to keeping grass and hedges properly trimmed and everything else endlessly and pointlessly - also quite strictly - regulated.

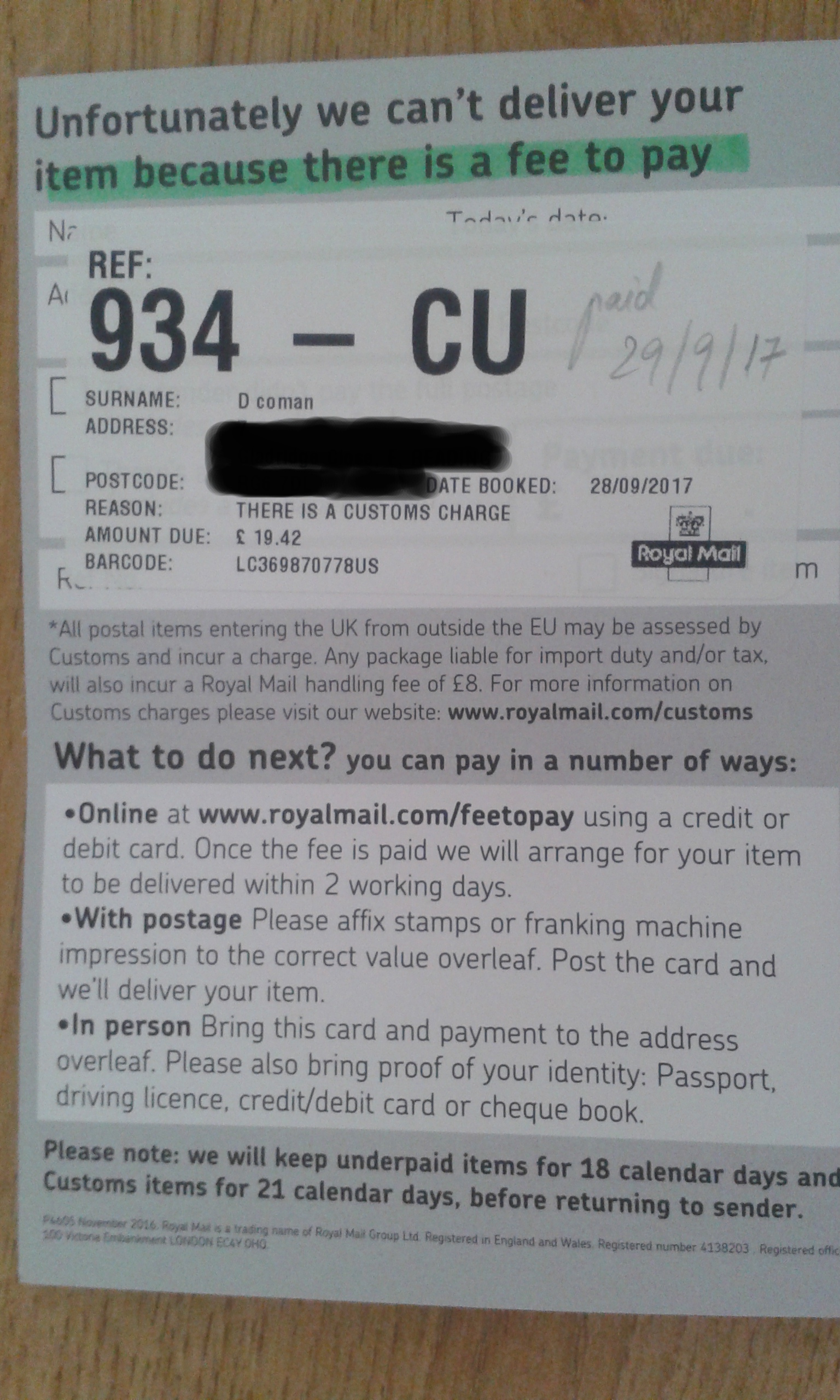

In this orderly environment, I decided to import... randomness. And not just any randomness at that, but true and fully auditable sources - yes, more than one - of randomness: long and narrow, fit-in-your-pocket-but-better-plug-in-your-computer electronic boards that go by the name of Fuckgoats and are exquisitely made as well as flawlessly packaged and posted by No Such Labs. Obviously, there was bound to be some bureaucrat to be paid for such disorderly conduct on my part. And so the bureaucrat's card arrived first, instead of the box with the stuff I had paid for. Hey, it's only piracy if it brandishes a cutlass, says "arrrgh" and wears an eye patch/hook hand/all that. Otherwise it's... bureaucracy, all right and proper dontcha know, and the oh-so-Royal Mail's on it too, so that makes it doubly orderly and proper, here you go-ey:

Get this: "unfortunately" they can't deliver my item (I guess that acknowledgement of it being mine is really an oversight on their part, possibly soon to be corrected under a more properly red - red in tooth and claw, as nature is, naturally - administration). The reason for this unfortunate event is that... they want some money[2] from this too, what? It's a bag of money passing them by, so they *should* get some of it, a bit of it, or it's... not fair!

The "what to do next" section is particularly funny in the way it lists "options" that are really all the same. What to do next? Well, what, pay, what ELSE? No else, no, we are in the land of the free here, where all options are duly listed and properly stamped, devoid of any difference other than in form: do you want to pay online, to pay in person, to pay standing, to pay sitting, to pay through your nose or through your ass? Oh, no, *not* paying is not an option, no, wrong century, wrong planet, wrong currency, wrong, wrong, wrong.

And so, for my sins of importing true randomness to this island of many options that are all the same[3], I paid £19.42 for them to split as spoils - pretty much robber-in-the-woods style (if a poor robber, yes). Still, they are - of course! - very orderly in *how* they split it: it's all set up, neatly detailed and glued onto my box, here you go:

According to those numbers, I paid £11.42 in VAT, apparently payable on *anything* valued above £15 - so basically anything more than a toothpick and change - that comes from outside the European Union. The fact that there is no equivalent of this item that I could possibly buy in the fabled European Union is of no import, of course. On top of that, I also paid £8 to the not-so-Royal Mail for their service in assisting the roadside robbery that this is, as a precondition of handing me my own parcel. Well, it's a hard life by the side of the road, and nobody can possibly be in any way all-that-bad if they give you one third of the loot at the end of the day, can they?

Anyways, once the ransom had been paid to the bureaucrats, the box of goodies finally made it into my hands, apparently unmolested too (they had simply stuck the paper on top of the box; otherwise, the packaging looked far too neat to have been the result of *their* efforts). And inside the little box sat my brand-new, shiny FGs, perfectly packaged in anti-static pouches and bubble wrap. Here's one to feast your eyes on, emerging from its packaging and measuring no more than a third of a standard playing card in width and about one and a half cards in length:

And now it's time to go and play with those things, of course!

1. And that in turn can't ever mean anything *other* than the lowest common denominator, i.e. the bottom of the pit, i.e. as shitty as it can get. Nature being very helpful in this direction, there is of course no bottom to that pit, but that's just... evolution, innit?

2. No, the sum there is not really the issue here at all. It's the principle behind all this that is the trouble, and if you think "oh, but it's only a little sum, what's all the fuss about", then go get fucked already, only a "little" and in the ass too, as that doesn't matter as long as it's just a little, does it?

3. Hey, I would have bought it HERE if it EXISTED, you know? It sucks to have to import cool stuff from halfway around the world, yes, but how does it follow that one also has to *pay locals* for their failure to have the cool stuff locally?